Introduction

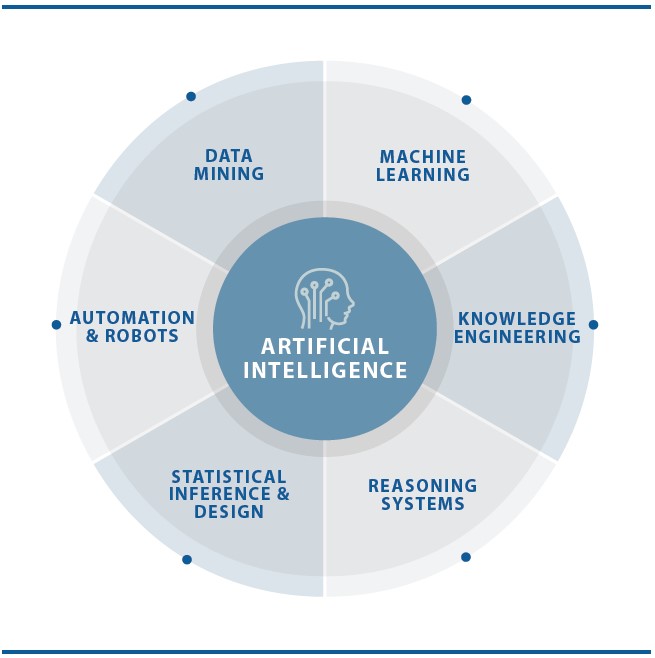

Air warfare both involves and is shaped by technology. The technologies in use bound the actions air forces can take, both empowering and constraining force employment options. Given this, major new technologies always attract great interest, and today that interest is focused on artificial intelligence (AI). For the foreseeable future, this means narrow AI, not general AI. Narrow AI equals or exceeds human intelligence for specific tasks within a particular domain. In contrast, general AI would equal the full range of human performance for any task in any domain. General AI appears several decades away (Gruetzemacher, 2019).

The global military interest for the near-to-medium term is in how narrow AI technologies could be employed on the modern battlefield. Such AI can be applied in multifarious ways and may be considered a general purpose technology that, as in wider society, will become pervasive and be incorporated into most military machines (Trajtenberg, 2018). This article restricts its gaze to AI in decision-making, and in particular in air warfare. It first discusses the technology, then notes the relevant operational constructs, and finishes by considering three alternative approaches to AI and machine learning assisted decision-making in air warfare.

Technology Matters

Modern AI has evolved to meet the needs of the commercial domain and especially consumers. A key advance came when low cost Graphics Processing Units (GPUs) became readily available, mainly to meet video gaming demand. With their massive parallel processing, GPUs can readily run machine learning software. Machine learning is an old concept, but it needed the combination of GPUs and access to ‘big data’ troves to become practical and affordable on a large scale.

In machine learning, the computer’s algorithms, not external human programmers, create the sequence of instructions and rules that the AI then uses to solve problems. In general, the more data used to train the algorithm, the better the rules and instructions devised. Given this, AI with machine learning can potentially teach itself while ‘on the job’, getting progressively better at a task as it steadily gains more experience in it.

In many cases, this data comes from a large-scale network of interconnected devices that collect information from the field and then transmit this through a wireless ‘cloud’ into a remote AI computer for processing. In the military sector, the Internet of Battlefield Things (IoBT) network features fixed and mobile devices, including drones able to collaborate with each other in swarms. Such IoBT networks allow remote sensing and control but generate vast amounts of data. A way around this is to connect the network to an edge device that can assess the data in real-time, forward the most important information into the cloud and delete the remainder, thereby saving on storage and bandwidth.
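The edge filtering step described above can be pictured with a small sketch. It is illustrative only: the `Reading` type, the `priority` score and the `budget` parameter are hypothetical names introduced here, and a fielded edge device would derive its importance score from a trained model rather than receive it ready-made.

```python
from dataclasses import dataclass
import heapq


@dataclass(frozen=True)
class Reading:
    """One sensor report arriving at the edge device (hypothetical structure)."""
    sensor_id: str
    priority: float  # assumed importance score, higher means more important
    payload: bytes


def triage(readings, budget):
    """Keep the `budget` highest-priority readings and count the rest as dropped.

    Returns a tuple (forwarded, dropped_count). The forwarded list is ordered
    from most to least important.
    """
    forwarded = heapq.nlargest(budget, readings, key=lambda r: r.priority)
    return forwarded, len(readings) - len(forwarded)


if __name__ == "__main__":
    batch = [
        Reading("s1", 0.1, b"background"),
        Reading("s2", 0.9, b"fast mover"),
        Reading("s3", 0.5, b"possible contact"),
    ]
    forwarded, dropped = triage(batch, budget=2)
    print([r.sensor_id for r in forwarded], dropped)
```

The point of the design is that only the forwarded readings consume bandwidth and cloud storage; everything else is discarded at the edge.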

Most edge computing is now done using AI chips. These are physically small and relatively inexpensive, use minimal power and generate little heat, allowing them to be readily integrated into handheld devices such as smartphones and non-consumer devices such as industrial robots. Even so, in many applications AI is used in a hybrid fashion: some portion on-device and some remotely in a distant fusion centre accessed via the cloud.

Operational Constructs

Several important operational concepts relevant to future air warfare are emerging. Operations are moving from being joint to being multi-domain, that is, across land, sea, air, cyber and space. The intent under a follow-on concept called “convergence” is that friendly forces should be able to attack hostile units across and in any domain (Wesley 2020, 4-5). For example, land units will now be able to engage ships at sea, air forces to attack space assets and cyber forces to act everywhere, simultaneously and in contested environments.

Such an operational concept abandons the traditional, single domain linear kill chains to embrace multi-domain ones that leverage alternate or multiple pathways. The emerging associated “mosaic” construct envisages the data flow across the large IoBT field creating a kill web, where the best path to achieve a task is determined and used in near real-time. The use made of the IoBT field is then fluid and constantly varying, not a fixed data flow as the older kill-chain model implies. The outcome is that the mosaic concept provides highly resilient networks of redundant nodes and multiple kill paths (Clark 2020). This cross domain thinking is now evolving further into notions of “expanded maneuver” (Vergun 2021).
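One way to picture the idea of choosing the best path across a web of redundant nodes is as a shortest-path search that is re-run whenever a node drops out. The sketch below is an illustration under stated assumptions, not the method described in the cited sources: it uses Dijkstra's algorithm as a stand-in, and the node names, link costs and the `unavailable` parameter are hypothetical.

```python
import heapq


def best_path(links, source, target, unavailable=frozenset()):
    """Dijkstra's shortest path over {node: {neighbour: cost}}.

    Nodes listed in `unavailable` are skipped, which models a jammed or
    destroyed node. Returns (total_cost, path) or None if no route exists.
    """
    if source in unavailable or target in unavailable:
        return None
    queue = [(0.0, source, [source])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbour, step in links.get(node, {}).items():
            if neighbour not in settled and neighbour not in unavailable:
                heapq.heappush(queue, (cost + step, neighbour, path + [neighbour]))
    return None


if __name__ == "__main__":
    # Hypothetical mesh: costs stand for latency in arbitrary units.
    mesh = {
        "sensor": {"relay_a": 1.0, "relay_b": 2.0},
        "relay_a": {"command": 1.0},
        "relay_b": {"command": 1.5},
    }
    print(best_path(mesh, "sensor", "command"))
    print(best_path(mesh, "sensor", "command", unavailable={"relay_a"}))
```

With both relays available the route runs through `relay_a`; when `relay_a` is marked unavailable the search falls back to `relay_b`, which is the kind of redundancy a fixed, linear chain does not offer.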

The complexity of implementing these interlocking operational concepts against peer adversaries during a major conflict is readily apparent. Making multi-domain operations that involve convergence, mosaic and expanded maneuver practical will require automated systems using AI with machine learning.

In the near-to-medium term, AI’s principal attraction for decision making involving such complex constructs will be its ability to quickly identify patterns and detect items hidden within the large data troves collected by the IoBT. The principal consequence is that AI will make it much easier to detect, localise and identify objects across the battlespace. Hiding will become increasingly difficult and targeting much easier. On the other hand, AI is not perfect. It has well known problems: it can be fooled, it is brittle, it cannot transfer knowledge gained in one task to another, and it is dependent on data (Layton, 2021).

AI’s principal warfighting utility then becomes ‘find and fool’. AI with machine learning is excellent at finding items hidden within a high clutter background; however, because it can be fooled, it lacks robustness. The ‘find’ starting point is placing many low cost IoBT sensors in the optimum land, sea, air, space and cyber locations in those areas across which hostile forces may transit. A future battlespace might feature hundreds, possibly thousands, of small-to-medium stationary and mobile AI-enabled surveillance and reconnaissance systems operating across all domains. Simultaneously, there may be an equivalent number of AI-enabled jamming and deception systems acting in concert, trying to create in the adversary’s mind a false and deliberately misleading impression of the battlefield.

Alternative Decision-Making Options

The AI and machine learning decision-making options possible will be influenced both by the technologies and the needs of the desired operational concepts. The alternatives discussed here are to use technology to be able to react to an adversary’s actions much faster, to get in front of the adversary through technology driven pre-emption, or to slow adversary decision making down significantly.

Option 1: Hyperwar

AI offers up visions of war at machine speed. John Allen and Amir Husain see AI allowing hyperwar where: “The speed of battle at the tactical end of the warfare spectrum will accelerate enormously, collapsing the decision-action cycle to fractions of a second, giving the decisive edge to the side with the more autonomous decision-action concurrency” (Allen, 2017).

In the case of air warfare decision-making, the well-known Observe-Orient-Decide-Act (OODA) model provides a useful framework to appreciate this idea. The model’s designer, John Boyd, advocated making decisions faster so as to get inside the adversary’s decision-making cycle. This would disrupt the enemy commander’s thinking, create a seemingly menacing situation and hinder their adaptation to a now too-rapidly changing environment (Fadok, 1997).

In the ‘Observe’ function, AI would be used for edge computing in most of the IoBT’s devices and then again in the central command centre that fuses the incoming IoBT data into a single comprehensive picture. For ‘Orient’, AI would play an important part in the battle management system (Westwood 2020, 22). AI would not only produce a comprehensive near-real time air picture but also predict the enemy’s air courses of action and movements.

The next AI layer, handling ‘Decide’, would be aware of the availability of friendly air defence units and would pass to the human commander for approval a prioritised list of approaching hostile air targets to engage, the optimum types of multi-domain attack to employ, the timings involved and any deconfliction considerations. Humans would remain in-the-loop or on-the-loop as necessary, not just for law of armed conflict reasons but because AI can make mistakes and needs checking before any irreversible decisions are made. After human approval, the ‘Act’ AI layer would assign the preferred weapons to each target, pass the requisite targeting data automatically, ensure deconfliction with friendly forces, confirm when the target was engaged and potentially order weapon resupply.

Option 2: Beyond OODA

AI technology is rapidly proliferating, making it likely that both friendly and adversary forces will be equally capable of hyperwar. The OODA model of decision making may then need to change. Under it, an observation cannot be made until after the event has occurred; the model inherently looks backwards in time. AI could bring a subtle shift. Combining suitable digital models of the environment and the opposing forces with high-quality ‘find’ data from the IoBT, AI could predict the range of future actions an adversary could conceivably take and, from this, the actions the friendly force might best take to counter them.

An AI and machine learning assisted decision making model might then be ‘Sense–Predict–Agree–Act’: AI senses the environment to find adversary and friendly forces; AI predicts what adversary forces might do in the immediate future and advises on the best friendly force response; the human part of the human–machine team agrees; and AI acts by sending machine-to-machine instructions to the diverse array of AI-enabled systems deployed across the battlespace. Under this decision-making option, friendly forces would aim to seize the initiative and act before adversary forces do. It is a highly calculated form of on-going, tactical level pre-emption.
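The structure of the ‘Sense–Predict–Agree–Act’ cycle, and in particular the position of the human approval step, can be sketched as a simple control flow. This is a minimal sketch under stated assumptions: the `Recommendation` type and the four callables are hypothetical placeholders, and nothing here represents a real battle management interface.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class Recommendation:
    """Output of the Predict step (hypothetical structure)."""
    predicted_adversary_action: str
    proposed_response: str
    confidence: float


def sense_predict_agree_act(
    sense: Callable[[], Any],
    predict: Callable[[Any], Optional[Recommendation]],
    agree: Callable[[Recommendation], bool],
    act: Callable[[Recommendation], None],
) -> Optional[Recommendation]:
    """Run one cycle. Nothing is acted on unless the human `agree` step returns True."""
    observations = sense()                  # Sense: gather the current picture
    recommendation = predict(observations)  # Predict: forecast and advise
    if recommendation is None:
        return None                         # nothing worth recommending
    if not agree(recommendation):           # Agree: the human gate
        return None
    act(recommendation)                     # Act: issue machine-to-machine instructions
    return recommendation


if __name__ == "__main__":
    issued = []
    result = sense_predict_agree_act(
        sense=lambda: {"contacts": 3},
        predict=lambda obs: Recommendation("probe from the north", "reposition sensors", 0.7),
        agree=lambda rec: rec.confidence >= 0.6,  # stand-in for a human decision
        act=issued.append,
    )
    print(result is not None, len(issued))
```

Keeping `agree` as a separate, mandatory call makes the human part of the human–machine team an explicit gate in the cycle rather than an optional monitor.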

AI will make it much easier to detect, localise and identify objects across the battlespace. Hiding will become increasingly difficult and targeting much easier.

– Dr. Peter Layton

Option 3: Stop Others’ Decision-Making

An alternative to trying to make friendly force decisions faster is to try to slow the adversary’s decision-making down. In air warfare an attacker needs considerable information about the target and its defences to mount successful air raids.

To prevent this, AI-enabled ‘fool’ systems could be dispersed across the battlespace, both physically and in cyberspace. Small, mobile, edge computing systems widely dispersed could create complicated electronic decoy patterns by transmitting a range of signals of varying fidelity. These systems might be mounted on drones for the greatest mobility, although uncrewed ground vehicles using the road network may also be useful for specific functions such as pretending to be mobile SAM systems. The intent is to defeat the adversary’s ‘find’ systems by building up a misleading or at least a confused picture of the battlefield.

AI-enabled ‘fool’ systems may also be used in conjunction with a sophisticated deception campaign. For example, several drones, all actively transmitting a noisy facsimile of the electronic signature of friendly force fighters, could take off when the fighters do. With seemingly very large numbers of fighter aircraft suddenly airborne, the adversary will be unsure which are real.

Conclusion

The three options present real choices in terms of decision-making. Perhaps at odds with initial perceptions, the hyperwar concept is most likely to involve a series of multi-domain salvo or spasm attacks rather than a continuous flowing action. Physical constraints mean that it would take time to rearm, refuel and reposition own-force machines for follow-on attacks.

On the other hand, the beyond OODA option can be much more of a continuous action, as it is effectively following a detailed plan, albeit one informed by IoBT battlespace sensing. Such a decision-making construct might suit an active defence that absorbed the first attack, learnt from it, and then attacked in a predetermined manner. Given AI’s processing speeds, the response would be determined immediately before being launched, allowing the greatest value to be gained from AI’s ‘on the job’ machine learning.

Lastly, the option of stopping others’ decision-making offers great promise for defenders but requires a good knowledge of the adversary, in terms of both the surveillance and reconnaissance systems in use and the cognition of the humans involved. It seems best suited to frozen conflict situations where the ‘fool’ systems can be optimally placed, the environment is very well understood and a single adversary is faced. This option may be less suitable for forces that deploy into distant combat zones quickly and have only a limited comprehension of the situation.

The option preferred will depend on the context, but the choice highlights that not everyone using AI in a conflict will use the same technology in the same way, even in the narrow area of decision-making. There is no doubt that AI will significantly change air warfare decision-making and, importantly, will do so in the near term. The choice for each air force today is which way is best for them. Now is the time to start thinking deeply about the issue.

References

Allen, J.R., and Husain, A. (2017). ‘On hyperwar’, USNI Proceedings Magazine, vol.143, no. 7.

Clark, B., Patt, D., and Schramm, H. (2020). Mosaic Warfare: Exploiting Artificial Intelligence And Autonomous Systems To Implement Decision-Centric Operations, Center for Strategic and Budgetary Assessments, Washington.

Fadok, D.S. (1997). John Boyd and John Warden: Airpower’s Quest for Strategic Paralysis, pp. 357-398 in Phillip S. Meilinger (ed.), The Paths of Heaven: The Evolution of Airpower Theory, Air University Press, USAF Maxwell Air Force Base.

Gruetzemacher, R., Paradice, D. and Lee, K.B. (2019). Forecasting transformative AI: an expert survey, Computers and Society: Cornell University. Available from: https://arxiv.org/abs/1901.08579 [16 September 2021].

Layton, P. (2021). Fighting Artificial Intelligence Battles: Operational Concepts for Future AI-Enabled Wars, Joint Studies Paper Series No. 4, Department of Defence, Canberra.

Trajtenberg, M. (2018). AI as the next GPT: a political-economy perspective, National Bureau Of Economic Research, Cambridge.

Vergun, D. (2021). DOD Focuses on Aspirational Challenges in Future Warfighting, DOD News, Available from: https://www.defense.gov/Explore/News/Article/Article/2707633/dod-focuses-on-aspirational-challenges-in-future-warfighting/#DCT [17 September 2021].

Wesley, E.J., and Simpson, R.H. (2020). Expanding the battlefield: an important fundamental of multi-domain operations, Association Of The United States Army, Arlington.

Westwood, C. (2020). 5th Generation Air Battle Management, Air Power Development Centre, Canberra.